| # Enter the container

[root@iot-32 mongo]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

71e093a630c1 mongo:latest "docker-entrypoint.s…" 11 minutes ago Up 11 minutes 27017/tcp mongo_cluster_mongo1.1.hsbms8e5n9likecljqin8po78

docker exec -it 71e093a630c1 /bin/bash

# Open the MongoDB shell

mongosh

root@71e093a630c1:/# mongosh

Current Mongosh Log ID: 661c9b3dab16df54397b2da8

Connecting to: mongodb://127.0.0.1:27017/?directConnection=true&serverSelectionTimeoutMS=2000&appName=mongosh+2.2.2

Using MongoDB: 7.0.8

Using Mongosh: 2.2.2

**************************************************************************************************************

# Define the replica set config (mongo3 is an arbiter)

test> config={_id:"mongo",members:[{_id:0,host:"mongo1:27017"},{_id:1,host:"mongo2:27017"},{_id:2,host:"mongo3:27017","arbiterOnly" : true}]}

{

_id: 'mongo',

members: [

{ _id: 0, host: 'mongo1:27017' },

{ _id: 1, host: 'mongo2:27017' },

{ _id: 2, host: 'mongo3:27017', arbiterOnly: true }

]

}

# Initiate the replica set

test> rs.initiate(config)

{ ok: 1 }

# Check the replica set status

mongo [direct: secondary] test> rs.status()

{

set: 'mongo',

date: ISODate('2024-04-15T03:14:32.851Z'),

...

members: [

{

_id: 0,

name: 'mongo1:27017',

...

{

_id: 1,

name: 'mongo2:27017',

...

},

{

_id: 2,

name: 'mongo3:27017',

...

}

],

ok: 1,

...

}

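# Next, adjust the member priorities so that the primary does not change after the cluster restarts

# Fetch the current config into a variable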

mongo [direct: secondary] test> cfg = rs.config()

{

_id: 'mongo',

version: 1,

term: 1,

members: [

{

_id: 0,

host: 'mongo1:27017',

arbiterOnly: false,

buildIndexes: true,

hidden: false,

priority: 1,

tags: {},

secondaryDelaySecs: Long('0'),

votes: 1

},

{

_id: 1,

host: 'mongo2:27017',

arbiterOnly: false,

buildIndexes: true,

hidden: false,

priority: 1,

tags: {},

secondaryDelaySecs: Long('0'),

votes: 1

},

{

_id: 2,

host: 'mongo3:27017',

arbiterOnly: true,

buildIndexes: true,

hidden: false,

priority: 0,

tags: {},

secondaryDelaySecs: Long('0'),

votes: 1

}

],

protocolVersion: Long('1'),

writeConcernMajorityJournalDefault: true,

settings: {

chainingAllowed: true,

heartbeatIntervalMillis: 2000,

heartbeatTimeoutSecs: 10,

electionTimeoutMillis: 10000,

catchUpTimeoutMillis: -1,

catchUpTakeoverDelayMillis: 30000,

getLastErrorModes: {},

getLastErrorDefaults: { w: 1, wtimeout: 0 },

replicaSetId: ObjectId('661c9b91e68c823d44507a9a')

}

}

# Raise the priority of mongo1

mongo [direct: primary] test> cfg.members[0].priority = 3

3

# Raise the priority of mongo2

mongo [direct: primary] test> cfg.members[1].priority = 2

2

# Apply the updated config

mongo [direct: primary] test> rs.reconfig(cfg)

{

ok: 1,

'$clusterTime': {

clusterTime: Timestamp({ t: 1713150935, i: 1 }),

signature: {

hash: Binary.createFromBase64('AAAAAAAAAAAAAAAAAAAAAAAAAAA=', 0),

keyId: Long('0')

}

},

operationTime: Timestamp({ t: 1713150935, i: 1 })

}

# Verify the new priorities

mongo [direct: primary] test> rs.config()

{

_id: 'mongo',

version: 2,

term: 1,

members: [

{

_id: 0,

host: 'mongo1:27017',

arbiterOnly: false,

buildIndexes: true,

hidden: false,

priority: 3,

tags: {},

secondaryDelaySecs: Long('0'),

votes: 1

},

{

_id: 1,

host: 'mongo2:27017',

arbiterOnly: false,

buildIndexes: true,

hidden: false,

priority: 2,

tags: {},

secondaryDelaySecs: Long('0'),

votes: 1

},

{

_id: 2,

host: 'mongo3:27017',

arbiterOnly: true,

buildIndexes: true,

hidden: false,

priority: 0,

tags: {},

secondaryDelaySecs: Long('0'),

votes: 1

}

],

protocolVersion: Long('1'),

writeConcernMajorityJournalDefault: true,

settings: {

chainingAllowed: true,

heartbeatIntervalMillis: 2000,

heartbeatTimeoutSecs: 10,

electionTimeoutMillis: 10000,

catchUpTimeoutMillis: -1,

catchUpTakeoverDelayMillis: 30000,

getLastErrorModes: {},

getLastErrorDefaults: { w: 1, wtimeout: 0 },

replicaSetId: ObjectId('661c9b91e68c823d44507a9a')

}

}

|
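The priority change in the session above can also be written as a small reusable function. The following is only a sketch: `withPriorities` is a hypothetical helper name (it is not part of mongosh), and `sampleCfg` is a trimmed stand-in for the real `rs.config()` output that reuses the host names from the session. The helper returns a copy of the config instead of mutating it, and it refuses to touch the arbiter, whose priority stays at 0.

```javascript
// Hypothetical helper (not a mongosh built-in): return a copy of a replica set
// config with new priorities applied, keyed by member host.
function withPriorities(cfg, prioritiesByHost) {
  const members = cfg.members.map((member) => {
    const wanted = prioritiesByHost[member.host];
    // Members without a requested priority are copied unchanged.
    if (wanted === undefined) return { ...member };
    // Arbiters hold no data and keep priority 0, so reject the request early.
    if (member.arbiterOnly) {
      throw new Error(`cannot set priority on arbiter ${member.host}`);
    }
    return { ...member, priority: wanted };
  });
  return { ...cfg, members };
}

// Trimmed sample input (assumption): only the fields the helper reads.
const sampleCfg = {
  _id: "mongo",
  members: [
    { _id: 0, host: "mongo1:27017", arbiterOnly: false, priority: 1 },
    { _id: 1, host: "mongo2:27017", arbiterOnly: false, priority: 1 },
    { _id: 2, host: "mongo3:27017", arbiterOnly: true, priority: 0 },
  ],
};

const updated = withPriorities(sampleCfg, {
  "mongo1:27017": 3,
  "mongo2:27017": 2,
});

// Print host=priority pairs for a quick visual check.
console.log(updated.members.map((m) => `${m.host}=${m.priority}`).join(" "));

// In mongosh, connected to the primary, the helper could be combined with the
// real shell methods like this:
//   rs.reconfig(withPriorities(rs.config(), { "mongo1:27017": 3, "mongo2:27017": 2 }))
```

Keying by host rather than by array index avoids depending on the order of `cfg.members`, which the index-based assignments in the session rely on.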

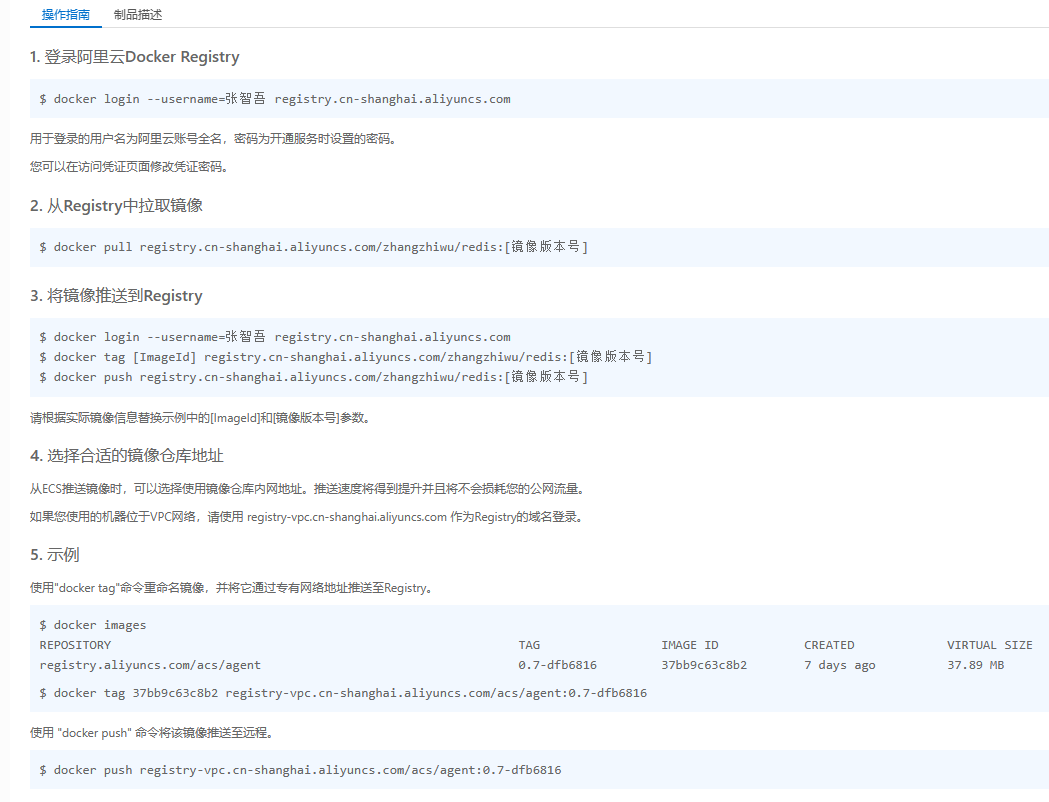

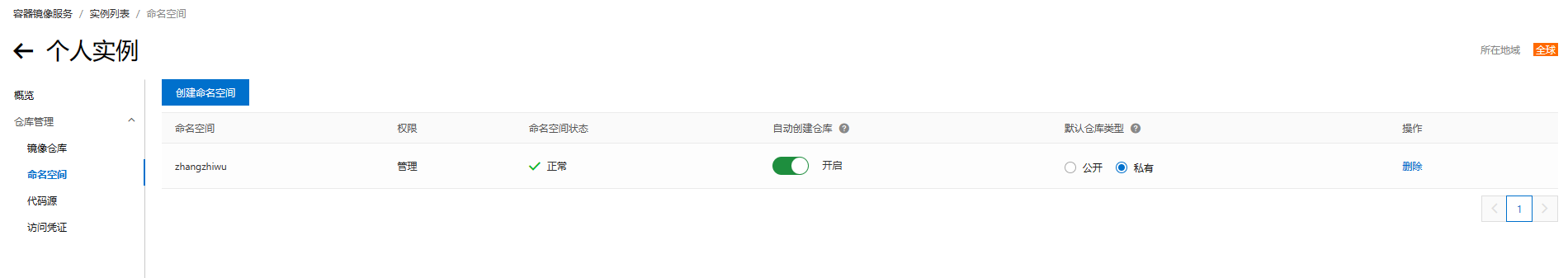

4. Create an image repository

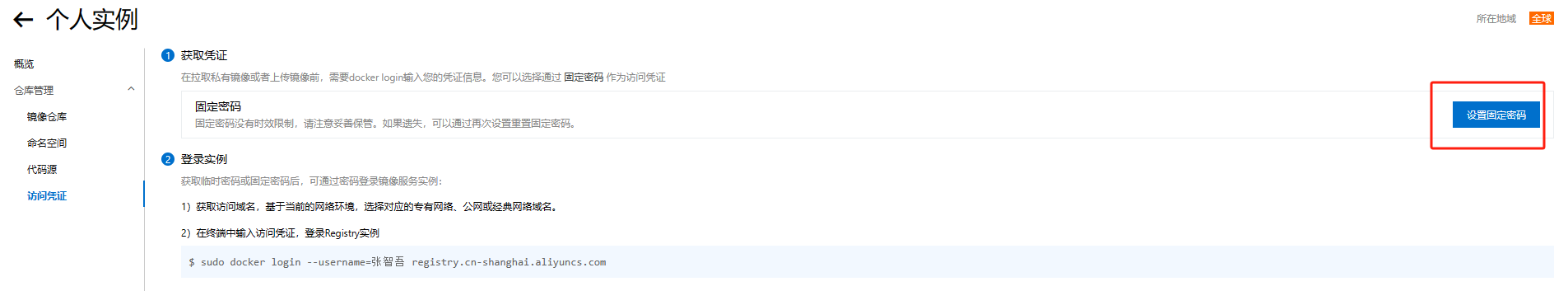

5. Set access credentials

6. Push to Alibaba Cloud